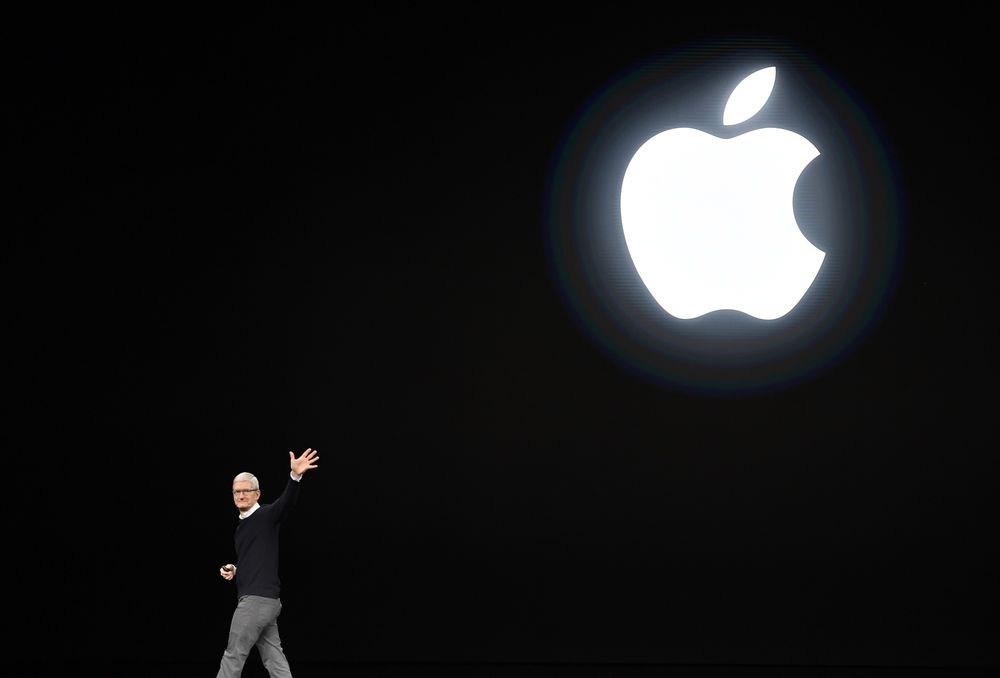

AFTER MAJOR TECH GIANTS LIKE GOOGLE, FACEBOOK AND MICROSOFT, APPLE HAS NOW ANNOUNCED A SYSTEM TO DETECT CHILD SEXUAL ABUSE MATERIAL IN iCLOUD UPLOADS FROM DEVICES IN THE US.

Apple on Thursday announced its decision to implement a system that checks whether uploads comply with guidelines in the United States. The system will use the database of the US National Center for Missing and Exploited Children (NCMEC) and other child safety organizations. The tool built to detect known abuse images will be called NeuralMatch. The company discussed it while unveiling its plans for iOS 15 and iPadOS 15. There are also rumors that Apple plans to scan even users’ encrypted chats for child safety.

NGOs working for child safety and rights have welcomed the steps Apple Inc. has taken towards child safety and protection. Notably, Apple has previously been under pressure from the US government over its strict privacy policies.

HOW DOES THE TOOL WORK?

Before an image is uploaded to iCloud, the on-device system will run a matching process: it turns the image into a hash and compares that hash with the hashes of known Child Sexual Abuse Material (CSAM). The known CSAM hashes will be supplied by various child safety organizations. If NeuralMatch flags an image as a match, the trillion-dollar company will manually review each report to confirm whether the tool was accurate. The company claims the system has an extremely high level of accuracy, with a false-positive rate below one in a trillion.
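The hash-and-compare step described above can be illustrated with a minimal sketch. This is not Apple's implementation: it assumes SHA-256 as a stand-in hash, which only matches byte-identical files, whereas a tool like NeuralMatch would need a hash that also tolerates resizing or re-encoding. The function names and the sample byte strings are hypothetical.

```python
import hashlib


def image_hash(data: bytes) -> str:
    # Stand-in hash (assumption): SHA-256 matches only byte-identical files.
    # A real system would use a perceptual hash robust to small image changes.
    return hashlib.sha256(data).hexdigest()


def build_known_hashes(known_images: list[bytes]) -> set[str]:
    # In the scheme described, only hashes (not images) would be supplied
    # by child safety organizations; here we derive them from sample bytes.
    return {image_hash(data) for data in known_images}


def flag_before_upload(data: bytes, known_hashes: set[str]) -> bool:
    # True means the upload matched a known hash and would go to manual review.
    return image_hash(data) in known_hashes


# Hypothetical placeholder data standing in for image files.
known = build_known_hashes([b"known-image-1", b"known-image-2"])

print(flag_before_upload(b"known-image-1", known))  # True
print(flag_before_upload(b"holiday-photo", known))  # False
```

Because the device compares hashes only, it can test an image against the database without holding the known images themselves.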

If the manual review confirms the match, Apple plans to take action against the user, including disabling the account and reporting it to the authorities.

Moreover, Apple for now plans to scan only iCloud uploads, not users’ personal libraries stored locally. The company also said that users who feel their accounts have been wrongly locked can appeal to have them unlocked.

PRIVACY CONCERNS

Several civil liberties groups have raised concerns over Apple’s tool. They called it a betrayal of the trust users place in the company’s privacy policy. Some cybersecurity experts also cautioned that it could lead to governments scanning devices more broadly in order to suppress political dissent. The tool would also make it impossible for researchers to verify whether Apple is keeping to the stated use of the tool. Matthew Green, a security researcher at Johns Hopkins University, said, “Whether they turn out to be right or wrong on that point hardly matters. This will break the dam - governments will demand it from everyone.”

The company says the new tool offers users ample privacy and checks only for CSAM. Even so, it leaves open a back door through which iCloud could compromise users’ privacy whenever the authorities demand it.
